AI Consciousness Unfolded

How do we know if artificial intelligences are unfeeling algorithms or conscious beings that experience sensations and emotions? The answer has major implications for the ethical guidelines we apply to AIs. If we believed that a future AI experienced pain and joy, we'd treat it differently than we'd treat a simple algorithm like an Excel formula. Can we know what an AI is experiencing? Theories of consciousness aim to say what gives rise to consciousness and could help determine whether AIs, animals, or even trees are conscious.

One of the foremost theories, Integrated Information Theory (IIT), is unique in that it comes with an equation for calculating how conscious any given thing is. A human brain scores very high while a pile of rocks scores zero. Electronic circuits from your kitchen lights to ChatGPT can have a wide range of scores. Regarding the latter and other artificial intelligences, IIT makes an interesting prediction: AIs built from complex, looping architectures will have at least some consciousness while those from linear, feedforward networks (ChatGPT included) will have zero consciousness.

If this theory can be proven true, it will be immensely useful for the future of AI ethics, not to mention for understanding human consciousness. Already, the theory is being used to predict if a patient in a vegetative state is truly unconscious or merely locked-in, unable to move yet perceptive of their surroundings. However, the provability of Integrated Information Theory has come into question recently, with a 2019 paper titled The Unfolding Argument. The argument doesn't say that IIT must be false, but rather that it can never be proven true. It hinges on the fact that IIT predicts different levels of consciousness for networks that are shaped differently but behave identically.

To fully understand the argument and what it means for our understanding of consciousness, let's dive into consciousness, IIT, recurrent versus feedforward networks, and the unfolding argument.

What is consciousness?

To understand a theory like IIT, we need to be clear about what we mean by the word "consciousness". Consciousness, in this context, is your subjective experience of the world: the experience of sights, sounds, feelings, and thoughts that is unique to your first-person perspective. It is what goes away when you fall asleep and returns when you wake up (or dream).

Compare yourself with a robot that has cameras for eyes, microphones for ears, and a speaker for a mouth. You probably have one of these robots in your pocket right now, if not in your hand. Both you and the robot process external data and turn this into actions, but only you experience these sights and sounds. Your phone, presumably, has no internal world; it loses no experience when powered off. You can take a photo of a sunset with your phone, but it doesn't consciously see the sunset in the way you do.

What is it about the three-pound gelatinous lump in your skull that brings about your unique consciousness? Why don't we process data without experiencing it, as empty inside as our phones? Theories of consciousness such as IIT aim to answer these questions.

Integrated Information Theory

Integrated Information Theory (IIT) is one of the leading scientific theories of consciousness today. IIT says that the "right" configuration of elements in a system leads to conscious experience. These elements could be neurons in a brain, transistors in a computer, or even trees in a forest.

IIT is unique among theories of consciousness in that it provides a mathematical equation for calculating a quantity that it says equates to consciousness. This quantity, called integrated information and denoted by the Greek letter Φ (phi), is calculated based on the current state of the system and how this state changes over time. A system (whether a brain or an artificial network built of silicon) that has high integrated information (Φ) experiences consciousness, according to IIT, and one with no Φ has no subjective experience.

The math behind IIT is quite involved, and we won't attempt to fully understand it here (but see this visual explanation by the authors of IIT). For the sake of the unfolding argument, though, it's important to know a little about IIT's math and its implications. Let's first understand what integrated information is.

In the context of IIT, information is a measure of how much a group of elements tells you about the rest of the system. If a group of neurons has high information, the state of these neurons will tell you a lot about the previous and future states of the whole brain. Integration is a measure of how much this information relies on the group of neurons being a unified group rather than a collection of disconnected neurons. If a group of neurons called group AB has 100 units of information about the brain, but group A and group B have 50 units each when taken separately, group AB has no integrated information.
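To make the arithmetic concrete, here is a toy sketch in Python. It treats integration as whatever information the whole group carries beyond the sum of its parts. That is a simplification for illustration only; IIT's actual calculation is far more involved. The function name `toy_integration` and the second set of numbers are made up for this example.

```python
def toy_integration(whole_info, part_infos):
    """Information the whole carries beyond its separate parts (never below zero).

    Toy bookkeeping only: this is NOT IIT's real formula for integrated information.
    """
    return max(0, whole_info - sum(part_infos))


# The example from the text: AB has 100 units, A and B have 50 each.
no_integration = toy_integration(100, [50, 50])

# Hypothetical contrast: the parts know less on their own than the whole does.
some_integration = toy_integration(100, [30, 30])

print(no_integration, some_integration)
```

With the numbers from the text the result is zero: group AB knows nothing that A and B don't already know separately. In the hypothetical second case, 40 units of information exist only when the group is taken as a whole.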

The last facet of IIT we need to understand is that the equation for Φ requires information about both the past and the future states of the system. When measuring Φ, we look first at how much a group of neurons tells us about the previous state of the whole brain, then at how much it tells us about the future state of the brain, and then we take the lesser of these two values. A group of neurons can only contribute to consciousness if it has information about both the past and future states of the system.
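The "take the lesser" rule can be sketched in a couple of lines. Again, this is a toy with made-up numbers rather than IIT's real equation, and `toy_contribution` is a hypothetical name.

```python
def toy_contribution(past_info, future_info):
    """A group only counts to the extent it informs BOTH the past and the future.

    Toy illustration of the 'take the lesser of the two values' rule.
    """
    return min(past_info, future_info)


# Hypothetical groups of neurons, each with (past_info, future_info).
groups = {
    "knows past and future": (3, 2),
    "knows only the future": (0, 5),
    "knows only the past": (4, 0),
}

contributions = {name: toy_contribution(*info) for name, info in groups.items()}
print(contributions)
```

Only the first group contributes anything. A group that is blind to either the past or the future contributes zero, no matter how much it knows about the other direction.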

This fact is critical to the unfolding argument because it means that feedforward networks have no integrated information. Let's see why.

Recurrent and feedforward networks

A recurrent network has a looping architecture, such that any neuron can connect to any other neuron, including itself or "upstream" neurons that connect to it. A feedforward network, on the other hand, processes information in a single direction, meaning that every neuron gets information from upstream neurons and passes it downstream; information never flows back to earlier neurons.
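One way to make the distinction precise is to write a network down as a wiring diagram and check whether any path of connections leads back to where it started. The sketch below does this with a standard depth-first search. The two four-neuron wirings are hypothetical examples, and `has_feedback_loop` is a name chosen for this illustration.

```python
def has_feedback_loop(connections):
    """Return True if the directed wiring diagram contains any loop."""
    # Collect every neuron, including ones that only ever receive input.
    nodes = set(connections)
    for targets in connections.values():
        nodes.update(targets)

    UNVISITED, IN_PROGRESS, DONE = 0, 1, 2
    status = {node: UNVISITED for node in nodes}

    def visit(node):
        status[node] = IN_PROGRESS
        for target in connections.get(node, []):
            if status[target] == IN_PROGRESS:
                return True  # we came back to a neuron we are still exploring
            if status[target] == UNVISITED and visit(target):
                return True
        status[node] = DONE
        return False

    return any(status[node] == UNVISITED and visit(node) for node in nodes)


# Hypothetical wirings: each neuron maps to the neurons it sends output to.
recurrent = {"A": ["B"], "B": ["C"], "C": ["B", "D"], "D": []}
feedforward = {"A": ["B"], "B": ["C"], "C": ["D"], "D": []}

print(has_feedback_loop(recurrent), has_feedback_loop(feedforward))
```

In the first wiring, C sends its output back to B, so information can circulate. In the second, every connection points downstream and the check finds no loop.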

Why does a feedforward network necessarily have a Φ of zero? In a feedforward network, the earliest neurons will know nothing about the past state of the system (because no neurons connect back to them) and the furthest downstream neurons will know nothing about the future state of the system (because they connect to no further neuron). Since integrated information is the minimum of the past and future information, a feedforward network has zero integrated information and zero consciousness, according to IIT.

The unfolding argument centers on the fact that any recurrent neural network can be unfolded into a feedforward neural network that behaves the same as the recurrent network. The feedforward network will likely require many more neurons than its recurrent counterpart, but it can mimic the behavior of the recurrent network all the same. When we say behavior, we are talking about the "input-output" function of the network. For any given input, our network will have a specific output. For instance, an artificial neural network may take a recording of speech as its input and output a string of text. These speech-to-text algorithms (which you use when texting via voice) are traditionally recurrent neural networks but could be implemented as a feedforward network, with identical results.

One way we know that any recurrent network can be unfolded into a feedforward network is that all neural networks are "universal function approximators". This means that, given enough neurons and layers, a neural network can approximate any input-output function. Michael Nielsen has written a delightful interactive proof here. Whether we allow our network to have recurrent connections or require it to be a feedforward network with only one-directional connections, it can approximate any input-output function. This means that, given any recurrent network, we can create a feedforward network with the same input-output function.

Unfolding Networks

Let's see how we can unfold a very simple recurrent network. Take the following network of four neurons. The next state of each neuron is calculated from the neurons connected to it. This is a recurrent network because some neurons get information from "downstream". For instance, node B depends not just on node A but also on node C. The animation below shows how this recurrent network turns inputs into outputs.

Although there is no end to the ways we can unfold this into a feedforward network, let's look at one simple way. The following network "stores" the previous inputs in a buffer and then uses this history to calculate the output. No recurrent connections are needed; no neuron connects back to a previous neuron.

Note that only the first five outputs match those of our recurrent network. This is because we've only unfolded the network a small amount. If we unfold it to a larger network with a longer buffer, we match more of the outputs.
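The same idea can be sketched in a few lines of Python. The toy below is not the four-neuron network described above. It is a hypothetical single-unit network that keeps a running parity of its inputs, chosen because it makes the effect of the buffer length easy to see. The function names and the input sequence are assumptions made for this illustration.

```python
from collections import deque


def run_recurrent(inputs):
    """Recurrent toy: the unit's state feeds back into itself at every step."""
    state = 0
    outputs = []
    for x in inputs:
        state = state ^ x  # the new state depends on the previous state (a loop)
        outputs.append(state)
    return outputs


def run_unfolded(inputs, buffer_len):
    """Feedforward toy: the output is computed only from a buffer of recent inputs."""
    buffer = deque(maxlen=buffer_len)  # holds raw inputs; no output is ever fed back
    outputs = []
    for x in inputs:
        buffer.append(x)  # the oldest input falls out once the buffer is full
        out = 0
        for past_x in buffer:
            out ^= past_x
        outputs.append(out)
    return outputs


inputs = [1, 0, 1, 1, 0, 1, 1, 0]
recurrent_out = run_recurrent(inputs)
short_unfold = run_unfolded(inputs, buffer_len=5)
long_unfold = run_unfolded(inputs, buffer_len=len(inputs))

print(recurrent_out)
print(short_unfold)
print(long_unfold)
```

With a buffer of five, the unfolded version agrees with the recurrent one for the first five inputs and can then drift, because the oldest inputs have fallen out of the buffer. With a buffer as long as the whole input sequence, the two agree at every step.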

Although this simple unfolding only mimics the input-output function for a limited duration, the authors of the unfolding argument declare: "Well-known mathematical theorems prove that, for any feedforward neural network (Φ = 0), there are recurrent networks (Φ > 0) that have identical i/o functions and vice-versa."

Let's take our simple networks and use IIT to calculate the amount of integrated information in each. Using the IIT Python library PyPhi, we see exactly what we'd expect: the recurrent network has a non-zero amount of integrated information (Φ = 1.5) and the feedforward network has zero integrated information (Φ = 0). IIT predicts consciousness in the recurrent network but not in the feedforward network, despite their identical behavior. You can run the calculations yourself in this notebook.

If these remarkably brief overviews of IIT and neural networks haven't left you feeling like an expert in the field, don't worry. The only thing you need to take away from the previous sections is that any network can be altered so that its behavior doesn't change but its level of consciousness, as predicted by IIT, does.

The unfolding argument

We've seen why IIT predicts consciousness for recurrent networks and not feedforward networks, and we've seen how recurrent networks can be recreated as feedforward networks with identical behavior, but what does all this mean for IIT?

Consider the brain: a highly recurrent neural network with a high degree of integrated information. The unfolding argument asks us to unfold this recurrent neural network into a feedforward neural network that has the same input-output function. We can think of the input-output function of the brain as how sensory inputs (light hitting your eyes or air pressure waves entering your ear) lead to motor outputs (the contraction of muscles leading to movement or the production of speech). Although it's a very complicated input-output function, it can be recreated by a feedforward neural network. What are the implications of theoretically being able to create a non-conscious brain that acts identically to a conscious brain?

Consider a research participant in a study that aims to validate IIT. Perhaps we are showing the participant images for a very short amount of time and asking the participant if they were conscious of the image. To validate IIT, we look at their brain activity and may hope to see higher integrated information in their visual cortex when they report seeing the image compared to when they were not conscious of the image, or else some other data about their brain state that validates IIT's predictions about consciousness. (Note that calculating the integrated information of the entire brain is unfeasible because of the size of the network, and in reality proxy measures stand in for Φ.)

By looking at the integrated information in the participant's brain and correlating this to their report about their conscious experiences, we can attempt to validate a theory like IIT. All is well so far, but here comes the crux of the unfolding argument.

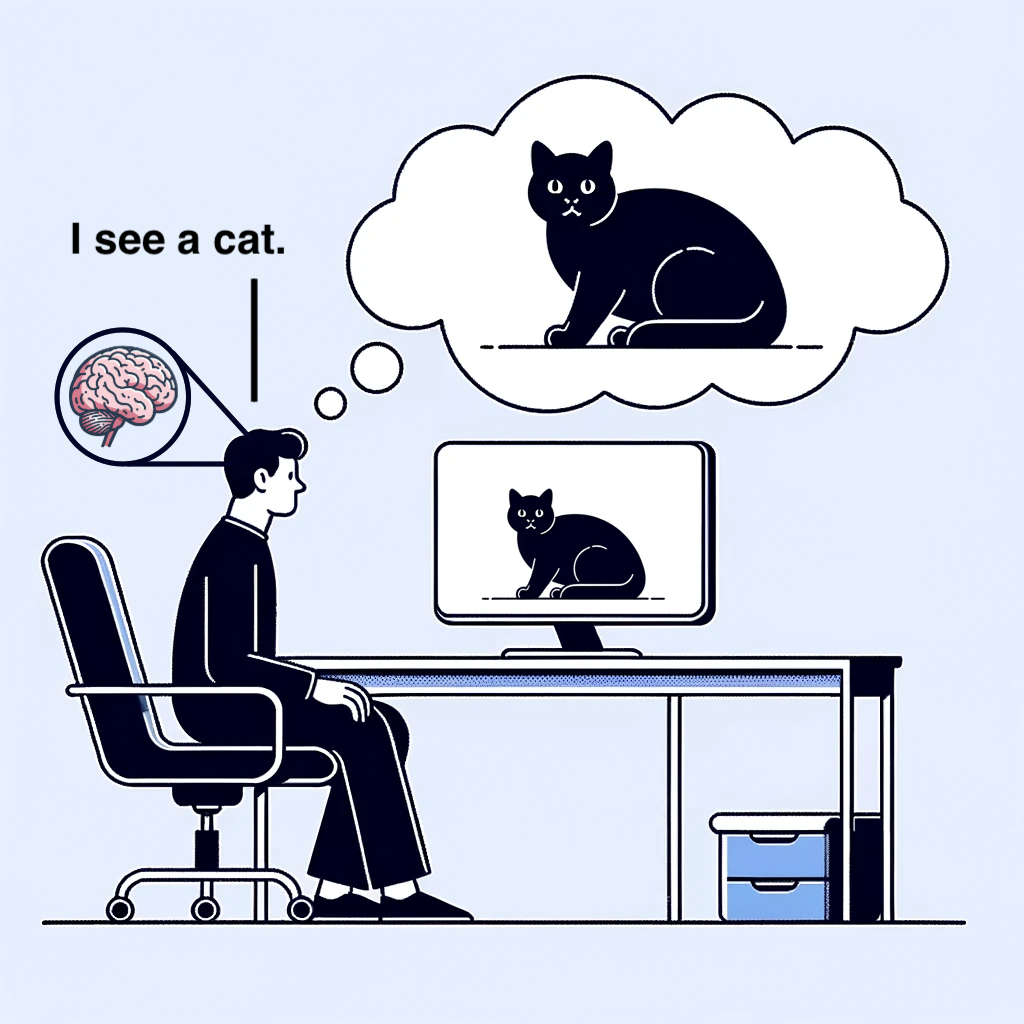

Repeat the same experiment, but this time unfold the brain of the participant into a feedforward network. This feedforward brain will behave identically to the original brain but, according to IIT, has no conscious experience. Since the unfolded brain has the same input-output function as the original brain, our new participant will behave exactly the same as our original participant. If our original participant would have said "I see a cat", so too will our unfolded participant, despite having no integrated information.

There are two ways to interpret this result. The first is that IIT is false; consciousness is not integrated information. After all, the participant is telling you they are conscious of the image, and yet their brain has no integrated information.

The second interpretation, equally valid, is that IIT is true and that the participant's report is false. After all, there is no guarantee that their verbal report matches their internal experience. We can't directly know what their internal experience is, so the best we can do is infer it from their report, which may be a lie. In fact, if IIT is true, there are guaranteed to be cases where someone's report does not line up with their internal experience since we can change the amount of integrated information in their brain without changing their behavior.

In the first interpretation, IIT is false. In the second, it is true but cannot be proven true, because there is no way to correlate the participant's report of their consciousness with IIT's prediction about their consciousness. The only way for us to scientifically confirm that IIT is correct is to see that someone's consciousness changes as their integrated information changes, but we can't directly see that their consciousness is changing; we can only rely on their reports. You might say that we can assume their consciousness is changing because their integrated information is changing, but we can only reach that conclusion once IIT is proven to be true. The only way to prove IIT is true is to use circular logic, assuming it is already true.

To summarize, there is no way to prove IIT is true because we must rely on someone's report about their internal experience in order to correlate their consciousness with their brain state, but we can't trust that their report correctly represents their consciousness. If only we could find a way to directly know the participant's internal experience without relying on their possibly faulty reports.

The unique participant

There's something special about you. You, and you alone, have access to your internal experience. Okay, it's true, everyone else has access to their own internal experience – but if you're looking for a participant for your study whose experience you have direct access to, someone whose verbal reports you don't need to rely on, there's only one person for the job: you. Can putting yourself in the seat of the participant save IIT from the unfolding argument?

The authors of the argument say no, because this wouldn't be scientific. Science relies on collecting data that can be shared with other scientists, and if your "data" is your internal experience, then this is not scientific, shareable data.

Let's accept this argument, that IIT cannot be proven scientifically, and move past it. What if you could prove IIT, even if only to yourself? Sure, it won't get you any scientific publications, but you'd know whether IIT accurately predicted your conscious experience.

Let's follow a thought experiment where you rely only on your own internal experience while trying to validate IIT. You're going to answer one very brief question: "Are you conscious?" Go ahead and do this part of the experiment now. Are you conscious? Do you have an internal, subjective experience? What is it like to answer that question? Perhaps you look around, consider your surroundings, confirm that you are experiencing them, and then answer "yes". In our thought experiment, you'll answer the question, then we'll unfold your brain and you'll answer it again.

Since this is only a thought experiment and not an NSF grant, let's propose some sci-fi technology that could, in theory, be used to unfold your recurrent network of a brain. First, we use a harmless virus to deliver nanobots to each of your neurons, nanobots that can read the activity of the neuron as well as change that activity. The nanobots have wireless functionality that allows them to send and receive information to and from any other nanobot or a central controller. Next, we grow brain tissue in a very large Petri dish and inject those neurons with nanobots too. This way, we can create artificial connections between neurons: a neuron can receive inputs from other neurons via the wireless nanobot connection, rather than relying on physical synapses. Our virtual synapses and large number of external neurons mean we can remove any unwanted connections in your brain and create a larger network connected any way we'd like. We still have a brain made up of organic neurons, but now we can adjust this network to be a feedforward network with the same input-output function (given a large enough Petri dish of extra neurons). At the press of a button, the research assistant can switch your brain from its original, recurrent architecture to an unfolded feedforward one.

As mentioned, you'll answer the question "Are you conscious?" both before and after this switch. If IIT is correct, you will be unconscious when answering the question with an unfolded brain. One thing is certain though: your answer to the question won't change. Since your unfolded brain is designed to have the same input-output function as your original brain, your answer to the question while your brain is unfolded is guaranteed to match whatever answer you would have given if your brain had not been switched. Your answer won't depend on whether your brain is folded or unfolded; your answer is independent of the integrated information in your brain.

Your research assistants won't know if your internal experience changed since your behavior hasn't changed, but that's why you put yourself in the seat. You have access to this internal experience and can confirm if IIT correctly predicts that you've lost consciousness. Of course, if you have lost consciousness, it'll be hard for you to know this. You can't be conscious of being unconscious. To compound the issue, you couldn't even reset your brain to its original form and expect to get any useful answers. Because your input-output function has never changed, your answer when asked "Were you actually conscious when we unfolded your brain, or were you just saying that?" is going to be the same as if your brain had never been unfolded. You may even have memories of having answered the original question in the moment, despite not having been conscious at the time.

Let's assume for a moment that IIT is incorrect, and your consciousness changes not one bit after the unfolding. Both your behavior and your internal experience are left untouched. When asked "Are you conscious?", you'd reply "Yes, I certainly am, IIT is incorrect! I feel no different than before you unfolded my brain." Note, though, that this is exactly what you'd say even if IIT were correct. How can we be so certain? Hopefully, the answer is obvious by now: your input-output function won't have changed, and neither will your answers.

Let's avoid making you fully unconscious. The authors of the unfolding argument make an interesting point alongside their central argument. IIT doesn't just predict how much a system is conscious, but also what it is conscious of. It turns out we can hack this: instead of unfolding your brain, we're going to adjust the network such that you're not conscious of the image of a cat in front of you but instead see an image of a flower. Once again, we can make this change without changing the input-output function of the network. Your behavior isn't affected, but your internal experience is different.

We show you the cat, turn your brain into "perceive flower" mode, and ask you what you're conscious of. You'll certainly say you see a cat since this is what you would have said if we hadn't messed with your brain. But, on the inside, you'd be seeing a flower. Would you know, then, that IIT was right? Perhaps, but you could never do anything with that information. You can wish all you want that you'd jump up and tell the research assistants "Wait a second, I actually saw a flower, IIT is correct!" But if that's not how you'd act with your brain in its original state, experiencing a cat, then it's not how you'd act now. Again, if you reset your brain to its original state, hoping you could inform everyone of what you actually experienced, you'd be disappointed. Your behavior would continue exactly as if you had seen the cat all along, and I suspect you'd even believe this was the case. Your conscious experience, according to IIT, can be fully uncoupled from your behavior.

So even if you can adjust your own integrated information in the hopes of confirming IIT without relying on third-person reports, you could at best know the result internally without ever being able to act on it. It seems that relying on your own first-person experience cannot save IIT.

The unfolding argument boils down to this: science relies on collecting data from experiments, and in consciousness science this data includes a person's report about their own conscious experience. However, these reports do not depend on integrated information; the same reports about consciousness can be collected while the amount of integrated information changes, or vice versa. Whether or not consciousness changes as integrated information changes, reports about consciousness are decoupled from integrated information, and thus we cannot know that consciousness and integrated information are changing together. Therefore, we cannot prove that IIT is true.

After the unfolding

The unfolding argument has provided a strong reason to believe that Integrated Information Theory is not provable. What does this mean for the future of consciousness studies? And what are the implications if IIT is, in fact, true?

The unfolding argument hinges on the fact that a system's Φ (which IIT says equates to consciousness) and that system's behavior are independent: one can change while the other stays the same. The same argument applies to any theory that defines a measure of consciousness which is independent of behavior. Why do we focus on IIT? Because IIT is the only theory to provide a quantitative measure of consciousness. Other theories provide frameworks and concepts that suggest what brings about consciousness, but only IIT provides an equation to calculate consciousness; only IIT is formalized. It is this bold and pioneering step that allows us to apply logic such as the unfolding argument to it.

It is important to remember that the unfolding argument does not disprove IIT. In the unfolding argument's thought experiment, there were two interpretations: IIT is false or IIT is true but not provable. Could the laws of the universe that dictate how consciousness arises really be unprovable? Certainly. The universe is not obliged to contain only provable laws.

If IIT is correct and the amount of integrated information in a system is equivalent to consciousness, what then? We would never be able to prove this fact, but we would see scientific results that support it. In fact, we already do. For instance, take two parts of the brain: the cerebellum and the cerebral cortex. The cerebellum has about three-quarters of the brain's neurons, but lacking a cerebellum makes almost no difference to one's subjective experience (though the person may notice some coordination issues). Remove a pinky nail-sized chunk of cortex, though, and you can expect to see drastic changes in conscious experience.

The architecture of the cerebellum is such that, despite its high number of neurons, it has very low integrated information. The cortex, in contrast, has very high integrated information. If IIT is correct and Φ equates to consciousness, then we'd expect this very result: the cerebellum's low Φ means it contributes almost nothing to subjective experience while the cortex's high Φ means it contributes much.

However, per the unfolding argument, we could construct a robot that has a cerebellum that functions exactly as a human's cerebellum but has a very high Φ, and a cortex that acts just like a human's but has a Φ of zero. IIT suggests that removing the robot's cerebellum and not its cortex would have an impact on its consciousness, though we'd have no way to know, as the robot would act identically to a human with a removed cerebellum. Although the evidence supports IIT, it cannot be used to prove IIT.

Does this mean IIT is of no use? Of course not. Even if we can never prove that Φ is consciousness, we may find that it is a good indicator of human consciousness. It may be that consciousness is something other than integrated information, but that whatever this other thing is, integrated information in the human brain changes more or less with it. Having an indicator of human consciousness would be very useful. Already, proxy measures of Φ are being used to predict whether patients in vegetative states are conscious or not. Here's how it works: send a magnetic pulse into the patient's brain and record the resulting echo of electrical activity. The more complex this echo, the higher the supposed integrated information and consciousness. These values turn out to be a good predictor of whether a patient in a coma-like state is truly unconscious or is in a locked-in state in which they are aware but cannot respond.

However, we cannot generalize these results outside of human brains. Even if Φ is a good indicator of consciousness in humans, it may not say anything about the consciousness of an AI. Perhaps Φ happens to change with consciousness in a human because of how our brains are structured and constrained, but has no correlation with consciousness in an artificial brain.

If we overlook this possibility and assume IIT is correct, then we'd believe that an AI like ChatGPT (which is a feedforward network) has no consciousness while a folded recurrent version that acts identically to it is conscious. It would be dangerous to assume this is the case, as we have not proven, and cannot prove, that Φ is consciousness. We may use Φ as a useful marker of consciousness in humans, but we can never turn to IIT in moral debates about AI.

The unfolding argument does not disprove IIT, but it does lay out important constraints as to what IIT can tell us about consciousness, whether our own or that of artificial intelligences. It tells us that the Integrated Information Theory of consciousness can never be proven and can never be used to inform our conversations about the ethics of artificial intelligences. At this pivotal point in human history, in which we may soon see the existence of human-like AI, it is crucial to understand the inner world of these creations as best we can.