Hands-On Monotonic Time Series Forecasting with XGBoost, Using Python

A couple of months ago, I was working on a research project and had to solve a problem involving time series.

The problem was fairly straightforward:

"Starting from this time series with t timesteps, predict the next k values"

For the Machine Learning enthusiasts out there, this is like writing "Hello World", as the problem is extremely well known in the community under the name "forecasting".
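A common way to turn this problem into something a regression model such as XGBoost can handle is to slice the series into fixed-length input windows, each paired with the k values that follow it. The sketch below is one possible framing, not necessarily the one used later in this post: it assumes a 1-D NumPy array, and the helper `make_windows` and the window length `w` are hypothetical names introduced only for illustration.

```python
import numpy as np

def make_windows(series, w, k):
    """Split a 1-D series into (inputs, targets) pairs.

    Each input row holds w consecutive values; the matching target row
    holds the k values that immediately follow them.
    """
    series = np.asarray(series, dtype=float)
    n = len(series) - w - k + 1  # number of complete (window, horizon) pairs
    if n <= 0:
        raise ValueError("series too short for the requested w and k")
    X = np.stack([series[i : i + w] for i in range(n)])
    Y = np.stack([series[i + w : i + w + k] for i in range(n)])
    return X, Y

# Toy series with t = 10 timesteps: 0, 1, ..., 9
X, Y = make_windows(np.arange(10), w=4, k=2)
print(X.shape, Y.shape)  # (5, 4) (5, 2)
print(X[0], Y[0])        # [0. 1. 2. 3.] [4. 5.]
```

Each row of `X` can then be used as a feature vector, with the columns of `Y` predicted either by one model per horizon step or by a single multi-output model.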

The Machine Learning community has developed many techniques to predict the next values of a time series. Some traditional methods rely on algorithms like ARIMA/SARIMA or Fourier Transform analysis, while more complex approaches include Convolutional/Recurrent Neural Networks and the super famous "Transformer" (the T in ChatGPT stands for Transformer).

While forecasting is a very well-known problem, forecasting with constraints is addressed far less often. Let me explain what I mean.

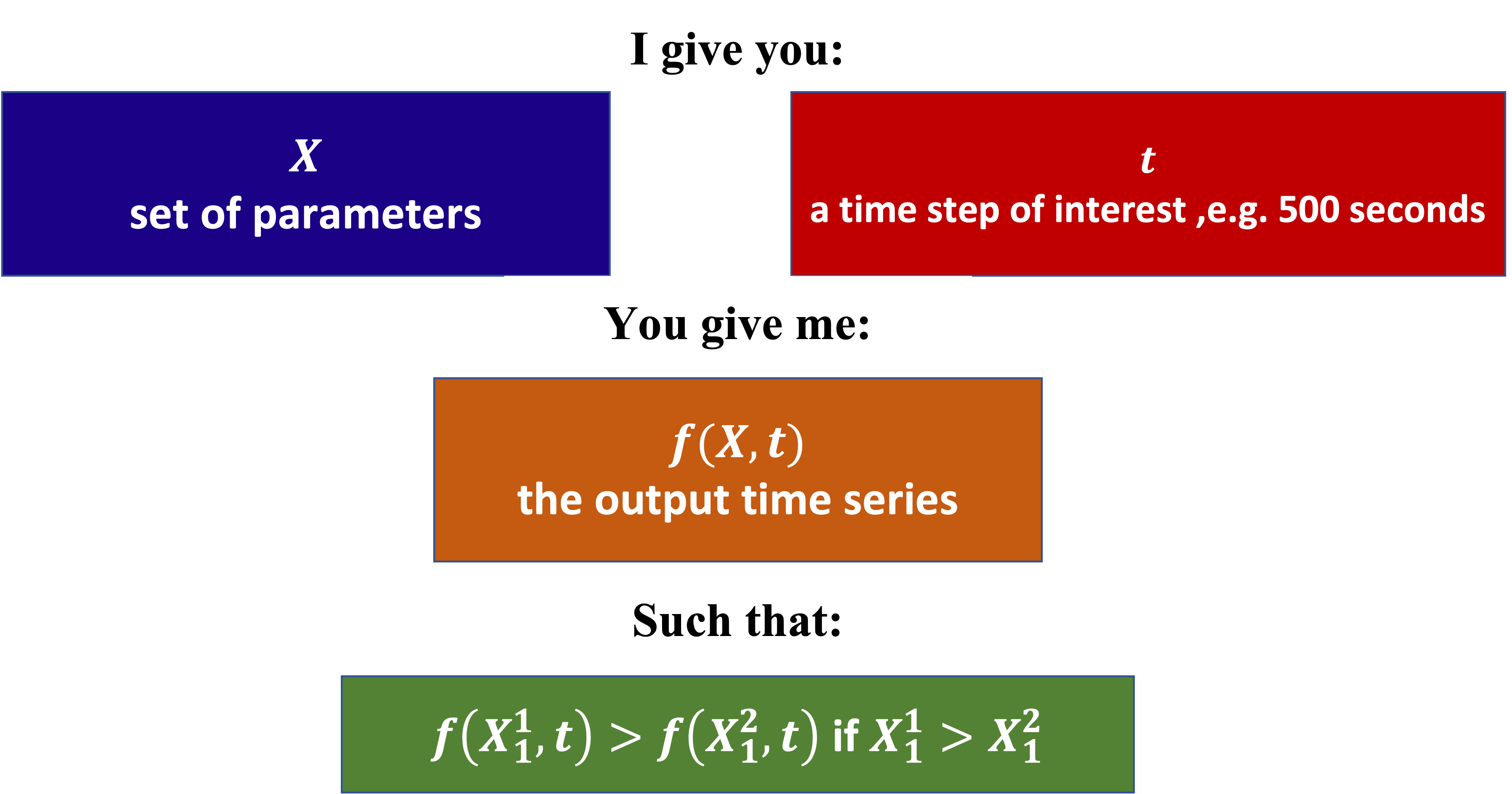

You have a time series with a set of parameters X and the time step t. The standard time series forecasting problem is the following:

The problem that we face is the following:

So, if the input has d dimensions, I want the function to be monotonic with respect to dimension 1 (for example). How do we deal with that? How do we forecast a "monotonic" time series? The approach we are going to describe in this post is XGBoost.
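One way to write this constraint formally, assuming a forecasting function f that takes the d-dimensional input x = (x_1, ..., x_d) and assuming "monotonic" means non-decreasing in x_1 (this notation is introduced here for illustration), is:

$$
x_1 \le x_1' \;\Longrightarrow\; f(x_1, x_2, \dots, x_d) \le f(x_1', x_2, \dots, x_d) \qquad \forall\, x_2, \dots, x_d
$$

For a non-increasing constraint the second inequality flips, and no condition is imposed on how f varies along x_2, ..., x_d.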

The structure of this blog post is the following:

- About the XGBoost: In a few lines, we will describe what XGBoost is about, its fundamental idea, and its pros and cons.

- XGBoost Example: The XGBoost code will be described, from the Python implementation to a toy example.

- XGBoost Example with Monotonicity: XGBoost will be tested on a real-world example.

- Conclusions: A summary of what has been said in this blog post will be given.

1. About the XGBoost

1.1 XGBoost Idea

XGBoost stands for eXtreme Gradient Boosting. The "gradient boosting" algorithm relies on a "chain of predictors": given an input matrix X and the corresponding output y, the idea is that we have a bunch of predictors. The first predictor tries to find the output y directly from the input X. End of story. No, I'm joking.
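The "chain of predictors" idea can be sketched in a few lines. In plain gradient boosting with a squared-error loss, each new predictor is fitted to the residuals left by the predictors before it, and the final prediction is the (shrunken) sum of all of them. The sketch below is a NumPy illustration of that generic idea, not XGBoost itself: it assumes single-feature regression stumps as the weak predictors, and the names `fit_stump`, `boost`, `n_rounds` and `learning_rate` are hypothetical.

```python
import numpy as np

def fit_stump(x, r):
    """Fit a depth-1 regression tree (a stump) on one feature x to targets r.

    Tries every midpoint between sorted unique x values as a split threshold
    and keeps the one with the lowest squared error. Returns a predict function.
    """
    xs = np.unique(x)
    best = None
    for thr in (xs[:-1] + xs[1:]) / 2:
        left, right = r[x <= thr], r[x > thr]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or sse < best[0]:
            best = (sse, thr, left.mean(), right.mean())
    if best is None:  # constant feature: fall back to predicting the mean
        m = r.mean()
        return lambda q: np.full(len(q), m)
    _, thr, left_value, right_value = best
    return lambda q: np.where(q <= thr, left_value, right_value)

def boost(x, y, n_rounds=50, learning_rate=0.3):
    """Chain of predictors: each stump is fitted to what the chain still gets wrong."""
    pred = np.zeros_like(y, dtype=float)
    stumps = []
    for _ in range(n_rounds):
        residual = y - pred  # pred starts at 0, so the first stump is fitted to y itself
        stump = fit_stump(x, residual)
        pred += learning_rate * stump(x)
        stumps.append(stump)
    # The final model is the shrunken sum of every predictor in the chain
    return lambda q: learning_rate * sum(s(q) for s in stumps)

x = np.linspace(0, 6, 60)
y = np.sin(x)
model = boost(x, y)
mse = np.mean((model(x) - y) ** 2)
print(f"training MSE: {mse:.4f}")
```

XGBoost builds on this same additive scheme, but with deeper trees, a second-order approximation of the loss, and regularization on the trees it adds.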