Incorporate an LLM Chatbot into Your Web Application with OpenAI, Python, and Shiny

In a few days, I am leading a keynote on Generative AI at the upcoming Cascadia Data Science conference. For the talk, I wanted to build something tailored to the conference, so I created a chatbot that answers questions about the conference agenda. To showcase it, I served the chatbot through a Shiny for Python web application. Shiny is a framework for building interactive web applications that run code on the backend.

Beyond prototyping, an important use case for serving a chatbot in Shiny is answering questions about the documentation behind the fields in a dashboard. For instance, what if a dashboard user wants to know how the churn metric in a chart was calculated? A chatbot within the Shiny application lets the user ask that question in natural language and get the answer directly, instead of digging through lots of documentation.

In this article, I will cover how a chatbot can be integrated and served within the Shiny for Python web application using OpenAI. Let's get started!

Code availability

Code for recreating everything in this article can be found at https://github.com/deepshamenghani/chatbot_shiny_python.

Create the Chatbot

In this article, I will build a basic chatbot so I can focus on integrating it with the Shiny web framework. However, if you are interested in learning how to create a fine-tuned chatbot, you can check out my previous article on the topic.

Set up the environment

I will import the following packages after installing them with pip install openai python-dotenv (the dotenv module comes from the python-dotenv package).

```python
from openai import OpenAI
import os
import dotenv
```

Connect with OpenAI

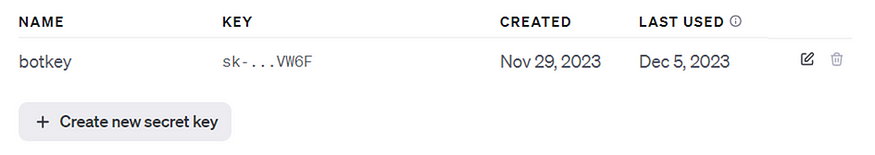

I will first set up an account on the OpenAI Platform and generate a unique API key. I will store this key in my .env file under the name OPENAI_API so that the code can read it without hard-coding it.
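Because os.getenv() quietly returns None when a variable is missing, a typo in the .env file only shows up later as a less obvious error from the OpenAI client. A minimal sketch of a fail-fast check, assuming the .env file contains a line such as OPENAI_API=your-key-here and that dotenv.load_dotenv() has already loaded it into the environment; require_env is a hypothetical helper, not part of the author's code:

```python
import os


def require_env(name: str) -> str:
    """Return a non-blank environment variable, or raise with a helpful message."""
    # Default to "" (instead of None) so .strip() works when the variable is missing
    value = os.getenv(name, "").strip()
    if not value:
        raise RuntimeError(
            f"Environment variable {name!r} is missing or empty; check your .env file."
        )
    return value


# Usage (after dotenv.load_dotenv() has read the .env file):
# client = OpenAI(api_key=require_env("OPENAI_API"))
```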

I will now create an OpenAI client with this key to start building the skeleton of the chatbot.

```python
dotenv.load_dotenv()  # Load the variables in .env into the environment

client = OpenAI(api_key=os.getenv('OPENAI_API'))
```

I need to start by providing an initial instruction to the bot, known as the system message. For simplicity, I am going to say "You are an assistant.", but you can be creative here if you want your chatbot to have a personality.

```python
conversation = [{"role": "system", "content": "You are an assistant."}]
```

Let's have a dialogue

I will now create a simple dialogue chatbot that answers the user's question, stored in the variable inputquestion. First, I will append this question to the conversation list.

```python
conversation.append({"role": "user", "content": inputquestion})
```

I will now use client.chat.completions.create to request a chat completion from OpenAI and store the result as response. I will use the gpt-3.5-turbo model to keep my costs low while still getting good-enough responses, but feel free to experiment with the newer models that are being released at lightning speed.

The full response object is long: besides the answer, it carries metadata such as the model name and token usage. So I will extract just the content string of the first choice's message and store it in assistant_response.

```python
response = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=conversation
)

assistant_response = response.choices[0].message.content
```

The simple chatbot is ready. Before I start creating the Shiny application, I will wrap this response completion in a function called ask_question() so that I can later call it from the web application.

This is what the final chatbot code looks like. I will save it in a file called conversationchatbot.py.

```python
from openai import OpenAI
import os
import dotenv

dotenv.load_dotenv()

client = OpenAI(api_key=os.getenv('OPENAI_API'))

# Create an instruction for the bot
conversation = [{"role": "system", "content": "You are an assistant."}]

def ask_question(inputquestion):
    # Append the user's question to the conversation list
    conversation.append({"role": "user", "content": inputquestion})

    # Request a chat completion (a response) from gpt-3.5-turbo
    response = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=conversation
    )

    # Extract the text of the response so it can be displayed
    assistant_response = response.choices[0].message.content

    return assistant_response
```

Alright, let's test the function.

```python
inputquestion = "What is customer churn? Respond in less than 10 words"
ask_question(inputquestion)
```

Here's the response: "Customer leaving a business for a competitor or alternative."
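As written, ask_question() appends only the user's questions to conversation, so on later turns the model never sees its own earlier answers. Also, because conversation lives at module level, every session served by the same Python process in a deployed app would share one history. A minimal sketch of one alternative, assuming the caller passes in the client and a per-user history list (ask_with_history is a hypothetical name, not part of the author's code):

```python
from __future__ import annotations

from typing import Any


def ask_with_history(client: Any, history: list[dict[str, str]], question: str,
                     model: str = "gpt-3.5-turbo") -> str:
    """Send the running conversation to the model and record both sides of the turn.

    `client` is expected to be an openai.OpenAI instance, or any object exposing
    client.chat.completions.create(model=..., messages=...) with the same response shape.
    """
    history.append({"role": "user", "content": question})
    response = client.chat.completions.create(model=model, messages=history)
    # message.content may be None (for example when the model returns a tool call)
    answer = response.choices[0].message.content or ""
    # Store the reply so the next request includes it as context
    history.append({"role": "assistant", "content": answer})
    return answer
```

In app.py, the history list could be created inside server(), which Shiny runs once per user session (for example history = [{"role": "system", "content": "You are an assistant."}]), and passed in on every call, so each user keeps a separate conversation.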

Shiny it up!

I will now move on to creating the Shiny for Python web application. At a high level, I want the user to have a space to enter a question and a space where the answer appears.

In Shiny for Python, the app.py file has two components: the ui, which defines the frontend of the application, and the server function, which handles the backend logic. In this article, I will cover only the Shiny for Python components needed for the interaction between the user and the chatbot.

If you want to learn how to build a Shiny for Python application from the ground up, including styling, you can check out my previous article, Learn Shiny for Python with a Puppy Traits Dashboard.

Set up the environment

I will import shiny after installing it with pip install shiny. I will also import conversationchatbot (the conversationchatbot.py file, kept next to app.py so it can be imported) so I have access to the response completion function.

```python
from shiny import App, ui, render, reactive
import conversationchatbot
```

The UI

The basic components I will add to my ui:

- Title of the application: I will use ui.h1() to add a heading for my application.
- Description of the application: I will use ui.markdown() to briefly describe what the application is for.
- Input text area: I will use ui.input_text() for the user to enter their question.
- Send button: I will use ui.input_action_button() to send the question to the response completion function and get an answer.
- Output text area: I will use ui.output_text() to create an area where the answer should appear.

```python
app_ui = ui.page_fillable(
    ui.page_auto(
        # Title of the application with styling
        ui.h1("Shiny Web Application Chatbot",
              style="font-family: Arial, sans-serif; color: #0078B8; text-shadow: 2px 2px 4px #000000; font-size: 2.7em; text-align: center; padding: 20px;"),

        # Description of the application
        ui.markdown("This application allows you to ask questions and get answers using a conversational AI model."),

        # Text input for user to enter their question
        ui.input_text("question_input", "Please enter your question and press 'Send':", width="100%"),

        # Button to send the question
        ui.input_action_button("send_button", "Send", class_="btn-primary"),

        # Card to display the answer (CSS margin needs a unit, hence 3px)
        ui.card(
            ui.output_text("answer_output", inline=True),
            style="white-space: pre-wrap; width: 100%; margin: 3px; padding: 10px;"
        )
    )
)
```

The Server

When the user enters a question in the ui, I need to take the following steps:

- Access the user's question on the server side using input.question_input.
- Check whether the send button has been clicked using input.send_button, and create a reactive step that runs once the button has been clicked.
- Send the question to the response completion function using conversationchatbot.ask_question() to get the response.
- Return the response to the ui to display in the answer_output area.
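The steps above can also be sketched as a plain Python function, separate from Shiny's decorators, which makes the logic easy to test on its own. This is a hypothetical helper (answer_for is not part of the author's code); compared with the server code that follows, it also treats a whitespace-only question as empty and turns an exception from the API call into a readable message instead of letting it surface in the output area:

```python
from typing import Callable

PLACEHOLDER = "....."


def answer_for(question: str, ask: Callable[[str], str]) -> str:
    """Return the chatbot's answer, or a placeholder when there is nothing to ask.

    In the app, `ask` would be conversationchatbot.ask_question.
    """
    question = (question or "").strip()
    if not question:
        # Nothing typed yet (or only spaces): show the same placeholder as the app
        return PLACEHOLDER
    try:
        return ask(question)
    except Exception as exc:  # e.g. network or authentication errors from the API
        return f"Sorry, something went wrong: {exc}"
```

Inside answer_output(), the body would then reduce to return answer_for(input.question_input(), conversationchatbot.ask_question). Catching every Exception is a deliberately broad choice for a prototype; a production app would likely log the error and handle specific failures differently.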

```python
def server(input, output, session):
    @render.text
    # ignore_none=False also runs this once at startup, before any click,
    # so the placeholder text is shown right away
    @reactive.event(input.send_button, ignore_none=False)
    def answer_output():
        # Get the user's question from the input
        question = input.question_input()
        if question:
            # Get the response from the conversational AI model
            response = conversationchatbot.ask_question(question)
            return response
        else:
            # Return placeholder text if no question is entered
            return "....."
```

Once the ui and server code is complete, I will create the Shiny application, which can then be launched locally with shiny run app.py.

```python
# Create the Shiny app
app = App(app_ui, server)
```

This is what the application looks like:

Getting the OpenAI API key as user input

In my scenario, I may not always want the web application to use my OpenAI key, as it can get costly. To solve this, I will add a button that users can click to enter their own OpenAI API key before continuing to engage with the chatbot. This requires edits to both the ui and server, as well as to the ask_question() function in the conversationchatbot.py file.
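With a user-supplied key, the server's answer_output also has to hand that key to ask_question(), which gains an api_key argument in the edits that follow. A minimal sketch of a guard for that call, assuming the key is read from the modal's password field (input.password()) and may still be empty; answer_with_key and its messages are hypothetical, not the author's code:

```python
from typing import Callable, Optional

MISSING_KEY_MESSAGE = "Please click 'Enter OpenAI API Key to get started' and add your key first."
PLACEHOLDER = "....."


def answer_with_key(question: Optional[str], api_key: Optional[str],
                    ask: Callable[[str, str], str]) -> str:
    """Call ask(question, api_key) only when both a question and a key are present."""
    question = (question or "").strip()
    api_key = (api_key or "").strip()
    if not api_key:
        # No key yet: prompt the user instead of sending a request that would fail
        return MISSING_KEY_MESSAGE
    if not question:
        # Same placeholder the original server code shows for an empty question
        return PLACEHOLDER
    return ask(question, api_key)


# Hypothetical use inside answer_output():
# return answer_with_key(input.question_input(), input.password(),
#                        conversationchatbot.ask_question)
```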

ask_question() function: I will add a second parameter, api_key, alongside the question, and create the OpenAI client inside the function with that key.

```python
def ask_question(inputquestion, api_key):
    # Initialize the OpenAI client with the provided API key
    client = OpenAI(api_key=api_key)

    ...
    ...
    ...

    return assistant_response
```

UI: I will add a button using ui.input_action_button() that the user can click to enter their API key.

```python
# Click the button to add the API key
ui.card(
    ui.input_action_button("apikey", "Enter OpenAI API Key to get started", class_="btn-warning")
)
```

Server: I will create a reactive event that opens a modal dialog where the user can enter their API key using ui.input_password. This reactive event should run when the apikey button is clicked. I will make the modal easy to close (easy_close=True), which means the user can click anywhere outside the modal after entering the API key to close it and continue engaging with the chatbot.

```python
# Inside server(input, output, session):
@reactive.effect
@reactive.event(input.apikey)
def _():
    m = ui.modal(
        # Input field for the OpenAI API key
        ui.input_password("password", "OpenAI API key:"),
        # Button to connect to OpenAI
        ui.input_action_button("connect", "Connect to OpenAI"),
        easy_close=True,
        footer=None,
    )
    ui.modal_show(m)  # Show the modal
```

Server: Once the user enters the API key, they can either click anywhere outside the box or click the connect button in the modal. I will add another reactive event that closes the modal automatically when the user clicks Connect to OpenAI.

```python
# Remove the modal when the 'connect' button is pressed
@reactive.effect
@reactive.event(input.connect)
def __():
    ui.modal_remove()  # Hide the modal
```

Once these edits are made, this is what the application looks like:

What's next!

Most of the time when I interact with a dashboard, I want to know what certain terms mean, which leads me to the documentation page. I believe enhancing the user experience of Shiny dashboards with LLM agents is an exciting possibility. However, it is important to evaluate the answers and test them for accuracy, as well as to create a feedback loop to improve the user experience.
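As a starting point for the accuracy testing mentioned above, here is a minimal sketch that assumes you write a few test questions together with keywords a good answer should contain. The cases and the keyword_accuracy helper are hypothetical, and the bot is called through any function that takes a question and returns a string. Keyword matching is crude: it catches obvious regressions cheaply but is no substitute for human review.

```python
from __future__ import annotations

from typing import Callable, Iterable


def keyword_accuracy(cases: Iterable[tuple[str, list[str]]],
                     ask: Callable[[str], str]) -> float:
    """Share of test questions whose answer contains every expected keyword.

    Matching is case-insensitive substring matching, so it is only a rough check.
    """
    cases = list(cases)
    if not cases:
        return 0.0
    passed = 0
    for question, keywords in cases:
        answer = (ask(question) or "").lower()
        if all(keyword.lower() in answer for keyword in keywords):
            passed += 1
    return passed / len(cases)


# Hypothetical usage with the single-argument ask_question() from conversationchatbot.py:
# cases = [("What is customer churn? Respond in less than 10 words", ["customer"])]
# print(keyword_accuracy(cases, conversationchatbot.ask_question))
```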

Shiny is also a great prototyping tool for such applications, letting you test them before serving them at their final destination. I hope this article was helpful in building out your own conversational bot experience.

Code for recreating everything in this article can be found at https://github.com/deepshamenghani/chatbot_shiny_python.

If you'd like, find me on LinkedIn.

All images in this article are by the author, unless mentioned otherwise.