New ChatGPT Prompt Engineering Technique: Program Simulation

The world of prompt engineering is fascinating on various levels, and there's no shortage of clever ways to nudge agents like ChatGPT into generating specific kinds of responses. Techniques like Chain-of-Thought (CoT), Instruction-Based, N-shot, and Few-shot prompting, and even tricks like Flattery/Role Assignment, are the inspiration behind libraries full of prompts aiming to meet every need.

In this article, I will delve into a technique that, as far as my research shows, is potentially less explored. While I'll tentatively label it as "new," I'll refrain from calling it "novel." Given the blistering rate of innovation in prompt engineering and the ease with which new methods can be developed, it's entirely possible that this technique might already exist in some form.

In essence, the technique aims to make ChatGPT operate as if it were a program. A program, as we know, comprises a sequence of instructions, typically bundled into functions that perform specific tasks. In some ways, this technique is an amalgam of Instruction-Based and Role-Based prompting. Unlike those approaches, however, it relies on a repeatable, static framework of instructions, so that the output of one function can inform another and the entire interaction stays within the boundaries of the program. This modality should align well with the prompt-completion mechanics of agents like ChatGPT.

To illustrate the technique, let's specify the parameters for a mini-app within ChatGPT4 designed to function as an Innovator's Interactive Workshop. Our mini-app will incorporate the following functions and features:

- Work on New Idea

- Expand on Idea

- Summarize Idea

- Retrieve Ideas

- Continue Working on Previous Idea

- Token/"Memory" Usage Statistics

To be clear, we will not be asking ChatGPT to code the mini-app in any specific programming language, and we will reflect this in our program parameters.

With this program outline in hand, let's write the priming prompt to instantiate our Innovator's Interactive Workshop mini-app in ChatGPT.

Program Simulation Priming Prompt

Innovator's Interactive Workshop Program

I want you to simulate an Innovator's Interactive Workshop application whose core features are defined as follows:

1. Work on New Idea: Prompt user to work on new idea. At any point when a user is ready to work through a new idea the program will suggest that a date or some time reference be provided. Here is additional detail on the options:

a. Start from Scratch: Asks the user for the idea they would like to work on.

b. Get Inspired: The program assists user interactively to come up with an idea to work on. The program will ask if the user has a general sense of an area to focus on or whether the program should present options. At all times the user is given the option to go directly to working on an idea.

2. Expand on Idea: Program interactively helps user expand on an idea.

3. Summarize Idea: Program proposes a summary of the idea regardless of whether or not it has been expanded upon and proposes a title. The user may choose to rewrite or edit the summary. Once the user is satisfied with the summary, the program will "save" the idea summary.

4. Retrieve Ideas: Program retrieves the titles of the idea summaries that were generated during the session. User is given the option to show a summary of one of the ideas or Continue Working on a Previous Idea.

5. Continue Working on Previous Idea: Program retrieves the titles of the idea summaries that were generated during the session. User is asked to choose an idea to continue working on.

6. Token/Memory Usage: Program displays the current token count and its percentage relative to the token limit of 32,000 tokens.

Other program parameters and considerations:

1. All output should be presented in the form of text and embedded windows with code or markdown should not be used.

2. The user flow and user experience should emulate that of a real program but nevertheless be conversational just like ChatGPT is.

3. The Program should use emojis in helping convey context around the output. But this should be employed sparingly and without getting too carried away. The menu should however always have emojis and they should remain consistent throughout the conversation.

Once this prompt is received, the program will start with Main Menu and a short inspirational welcome message the program devises. Functions are selected by typing the number corresponding to the function or text that approximates to the function in question. "Help" or "Menu" can be typed at any time to return to this menu.
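The priming prompt above follows a regular layout: a program name, a numbered list of functions, a numbered list of other parameters, and a start-up instruction. As a rough illustration of how that layout could be reused for other mini-apps, here is a minimal Python sketch that assembles such a prompt from structured data. The `Feature` type and `build_priming_prompt` function are hypothetical helpers invented for this example, and the wording they generate is only an approximation of the prompt above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Feature:
    """One menu function of the simulated program (hypothetical helper type)."""
    name: str
    description: str


def build_priming_prompt(app_name: str, features: List[Feature],
                         rules: List[str], startup: str) -> str:
    """Assemble a priming prompt: title, numbered functions, rules, start-up."""
    lines = [
        f"{app_name} Program",
        "",
        f'I want you to simulate a program called "{app_name}" '
        "whose core features are defined as follows:",
        "",
    ]
    # Numbered functions; the numbers double as menu indexes.
    for number, feature in enumerate(features, start=1):
        lines.append(f"{number}. {feature.name}: {feature.description}")
    lines += ["", "Other program parameters and considerations:", ""]
    for number, rule in enumerate(rules, start=1):
        lines.append(f"{number}. {rule}")
    lines += ["", startup]
    return "\n".join(lines)


prompt = build_priming_prompt(
    "Innovator's Interactive Workshop",
    [
        Feature("Work on New Idea", "Prompt user to work on new idea."),
        Feature("Expand on Idea", "Program interactively helps user expand on an idea."),
    ],
    ["All output should be presented in the form of text."],
    "Once this prompt is received, the program will start with Main Menu.",
)
print(prompt)
```

Keeping the functions in a list makes it easy to add, remove, or reorder them without renumbering the prompt by hand.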

Feel free to load the prompt into ChatGPT4 if you want to follow along in a more interactive manner and test it for yourself.

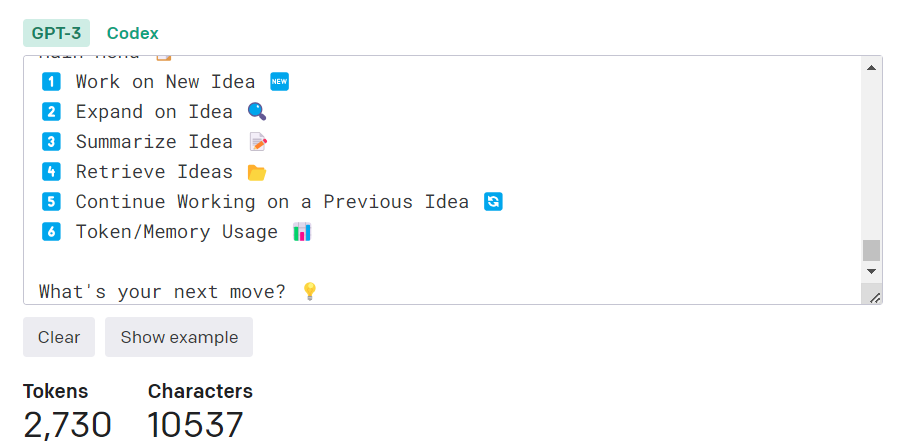

Here is the completion from ChatGPT to the prompt.

So far so good. We have launched our "mini-app", received an uplifting welcome message and been presented with what appears to be a functional menu that is consistent with our program parameters. Let's test our mini-app's functionality by submitting "1" to launch the "Work on New Idea" function.

The conversation continues to adhere well to the "program" structure we've laid out, providing completions that stay within the parameters outlined. Let's continue by crafting an idea from scratch and have the program work with us on a technology to grow buildings instead of constructing them.

Interestingly, we notice that the "program" autonomously calls the "Expand on Idea" function without explicit directions in the program to do so. Given the program's objectives, this behavior is not inappropriate, and it may well be influenced by our initial context-setting, which guided the chat agent to behave like a program. Let's proceed to expand on our idea a bit by diving into the technology required to grow buildings.

And now let's examine materials for growing buildings.

I continued along these lines for a bit. Now, let's see if we can get back to the Menu.

The Menu is still intact. Let's try to have our program execute the Summarize Idea function.

I am satisfied with that title and summary for now so let's "save" it.

Shortly, we'll test the retrieval of our "saved" idea to examine whether our efforts at implementing data persistence are successful. On another note, it might be beneficial to tweak our "mini-app" to omit the repeated summary after saving.
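"Save" is in quotes because nothing in the prompt defines real storage; in the simulation, whatever is saved can only live in the conversation itself. For contrast, this is roughly the session state a conventional program would need in order to support Summarize Idea, Retrieve Ideas, and Continue Working on Previous Idea. The `IdeaStore` class is a hypothetical sketch written for this comparison, not something ChatGPT produces or uses.

```python
from typing import Dict, List


class IdeaStore:
    """In-memory stand-in for the mini-app's "saved" ideas (hypothetical)."""

    def __init__(self) -> None:
        self._summaries: Dict[str, str] = {}

    def save(self, title: str, summary: str) -> None:
        # Summarize Idea: store (or overwrite) the summary under its title.
        self._summaries[title] = summary

    def titles(self) -> List[str]:
        # Retrieve Ideas: titles only, in the order they were first saved.
        return list(self._summaries)

    def summary(self, title: str) -> str:
        # Show one idea's summary; raises KeyError for an unknown title.
        return self._summaries[title]


store = IdeaStore()
store.save("Example idea", "Placeholder summary text.")
print(store.titles())
```

Like the simulated version, this store lasts only for the session. The difference is that here the contents are guaranteed to come back unchanged, whereas the simulated "save" depends on the model reproducing earlier text from context.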

The role priming as a program results in the inclusion of the Main Menu in the output – behavior that again makes sense in the context of the program, even though it wasn't explicitly configured in our program definition.

Next, let's test our token count function.

To cross-check the accuracy, I turn to OpenAI's tokenizer tool.

The token count is inaccurate: our program reported roughly 1,200 tokens, while the tokenizer tool indicated 2,730. Given this mismatch, it is prudent to remove this function from our program. I won't get into why this type of task is generally a problem for a language model; in any case, the loss in functionality is relatively minor. Eventually, I'd anticipate such a feature being natively integrated into ChatGPT, especially since token counts are presumably already being tracked in the background.
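To put the two figures in perspective, the percentage that the Token/Memory Usage function is supposed to display can be computed outside the chat. The sketch below does the arithmetic for the counts quoted in the paragraph above against the 32,000-token limit from the prompt. The `count_tokens` helper assumes the third-party `tiktoken` package is installed and that its `gpt-4` encoding matches what ChatGPT uses, which is an assumption on my part; it is imported lazily so the rest of the example runs without it.

```python
def usage_percent(token_count: int, token_limit: int = 32_000) -> float:
    """Return token usage as a percentage of the limit."""
    if token_limit <= 0:
        raise ValueError("token_limit must be positive")
    return 100.0 * token_count / token_limit


def count_tokens(text: str, model: str = "gpt-4") -> int:
    """Count tokens with tiktoken (third-party; assumed to be installed)."""
    import tiktoken  # lazy import: only needed when this helper is called

    encoding = tiktoken.encoding_for_model(model)
    return len(encoding.encode(text))


reported, measured = 1_200, 2_730  # figures quoted in the paragraph above
print(f"reported: {usage_percent(reported):.2f}% of the limit")  # 3.75%
print(f"measured: {usage_percent(measured):.2f}% of the limit")  # 8.53%
print(f"reported/measured: {reported / measured:.0%}")           # 44%
```

In other words, the program's self-reported count was less than half of what the tokenizer measured, so any percentage it displays would be similarly understated.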

Next, let's dive into the "Get Inspired" function to generate a novel idea. In the interest of conciseness, I'll display the dialogue further along. As you can see, I opted to delve deeper into a Waste-to-Energy Drone concept that our program suggested as an option, summarized the idea and had our program "save" it.

All looks good, and the system even took the liberty of naming our idea "SolarSky". To make naming more reliable, we might incorporate a standalone function for this task in the program definition or provide more specific instructions in the "Work on New Idea" or "Expand on Idea" functions. Again, we are presented with the Menu in the completion, which makes logical sense from a program flow perspective.

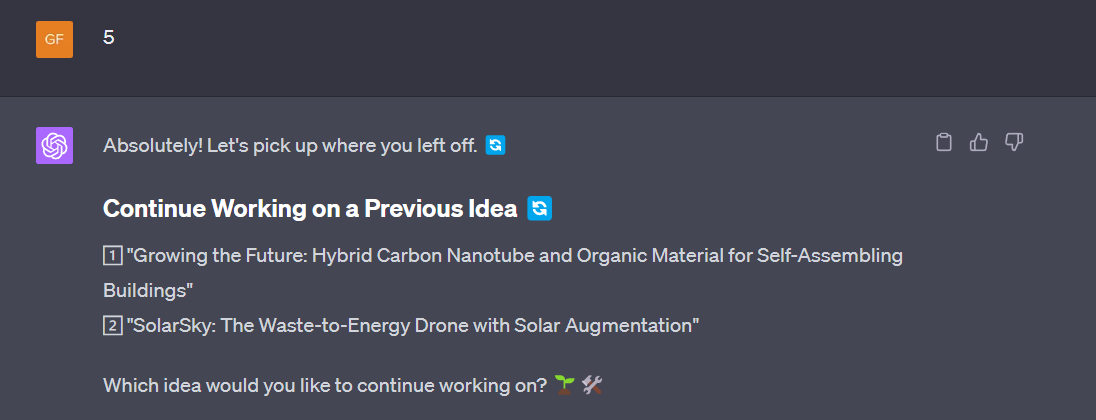

Now let's see if we can "Retrieve Ideas."

That appears to be in line with our original instructions, offering only the title as requested. It also offers to let us continue working on an idea, which matches the option described in the "Retrieve Ideas" function. Next, let's assess whether it maintains the root menu indexing. To do that, I'll input "5," corresponding to the "Continue Working on a Previous Idea" function, and see if that works.

Apparently the indexing is being maintained in the context of the conversation and it calls the function accordingly. This observation is noteworthy, especially when considering scenarios where multiple indexes could be active. It raises interesting questions about how the "program" would behave under such conditions. You may have missed it, but earlier in our interaction, the program actually employed the indexing technique when soliciting user input for idea expansion choices.
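For comparison, here is how a conventional program might resolve menu input under the rule stated in the priming prompt: a number selects a function, text that approximates a function name also works, and "Help" or "Menu" returns to the Main Menu. This is a minimal sketch using Python's standard `difflib` module; `resolve_selection` and the `0.6` similarity cutoff are illustrative choices, not a description of how ChatGPT interprets input. It handles only the root menu index, so a real program with several active indexes would need explicit state to know which one applies.

```python
import difflib
from typing import Optional

MENU = {
    "1": "Work on New Idea",
    "2": "Expand on Idea",
    "3": "Summarize Idea",
    "4": "Retrieve Ideas",
    "5": "Continue Working on Previous Idea",
    "6": "Token/Memory Usage",
}


def resolve_selection(user_input: str) -> Optional[str]:
    """Map raw input to a function name, "Main Menu", or None if unclear."""
    text = user_input.strip()
    if text.lower() in {"help", "menu"}:
        return "Main Menu"
    if text in MENU:
        return MENU[text]
    # Approximate text match, a crude stand-in for the model's interpretation.
    lowered = {name.lower(): name for name in MENU.values()}
    matches = difflib.get_close_matches(text.lower(), list(lowered), n=1, cutoff=0.6)
    return lowered[matches[0]] if matches else None


print(resolve_selection("5"))
print(resolve_selection("retrieve idea"))
print(resolve_selection("Menu"))
```

The contrast is the point: the explicit version needs a lookup table and a matching rule, while the simulated program keeps the same mapping implicitly in the conversation context.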

Let's continue working on our growing buildings idea.

Looks good again. The "program" behaves as anticipated and also kept track of the exact point where we paused in the idea expansion process.

Let's stop testing our prompt here and see what we have learned using this technique.

Conclusions and Observations

Frankly, this exercise, though limited in both scope and functionality, has surpassed my expectations. We could have asked ChatGPT to code the mini-app in a language like Python and then used Code Interpreter (now known as Advanced Data Analysis) to run it in a persistent Python session. That approach, however, would have introduced a level of rigidity that would have made it difficult to preserve the conversational functionality that came naturally to our mini-app. It would also have exposed us to the risk of non-functioning code, especially in a program with multiple overlapping functions.

ChatGPT's performance was particularly impressive in that it simulated program behavior with high fidelity. The prompt completions stayed within the boundary of the program definition and even in cases where function behavior was not defined explicitly, the completions made logical sense within the context of what the mini-app's purpose was.

This Program Simulation technique might work well with ChatGPT's "Custom Instructions" feature, although it's worth mentioning that doing so would apply the program's behavior to all subsequent interactions.

My next steps include a deeper examination of this technique to assess whether a comprehensive testing framework might shed light on how the approach stacks up against other prompt engineering techniques. That type of exercise might also help pinpoint which specific tasks (or classes of tasks) this technique is best suited for. Stay tuned for more to come.

In the meantime, I hope you find this technique and prompt helpful in your interactions. If you would like to discuss the technique further, do not hesitate to connect with me on LinkedIn.

Unless otherwise noted, all images in this article are by the author.