Reduce your Cloud Composer bills

(Part 1)

Use Scheduled CICD pipelines to shut down environments and restore them to their previous state

Cloud Composer is a managed and scalable installation of Airflow, the popular and sophisticated job orchestrator. The service is available on Google Cloud Platform (GCP) in 2 flavors, Cloud Composer 1 and Cloud Composer 2. The main difference between them is Workers Autoscaling, which is only available in Cloud Composer 2.

Having used the service for many years, I can definitely say that it's worth a try. Yet some companies steer clear of it, and the reason might not surprise you much: money.

In this article, I'll share an efficient way to reduce your Cloud Composer bill. Although the code snippets only work for Cloud Composer 2, the strategy also applies to Cloud Composer 1 users.

Please note that this is the first part of a two-part series. The second article can be consulted here.

Following are the main topics that will be covered:

Understanding Cloud Composer 2 pricing (Part 1)

Snapshots as a way to shut down Composer and still preserve its state (Part 1)

Creating Composer Environments using Snapshots (Part 1)

Summing Up (Part 1)

Destroying Composer Environments To Save Money (Part 2)

Updating Composer Environments (Part 2)

Automating Composer Environments Creation and Destruction (Part 2)

Summing Up (Part 2)

Understanding Cloud Composer 2 pricing

The main concept in Cloud Composer is the Environment. An Environment corresponds to an instance of Airflow, with a name and a version. Each Environment is made of a set of Google Cloud services whose usage incurs costs. For instance, the Airflow metadata database runs inside each Environment as a Cloud SQL instance, and the Airflow Scheduler is deployed in each Environment as a Google Kubernetes Engine Pod.

There are 3 main parts to Cloud Composer pricing:

- The Compute Cost: This is the cost of the Google Kubernetes Engine Nodes that run the Airflow Scheduler, Airflow Workers, Airflow Triggerers, Airflow Web Servers and other Cloud Composer components.

- The Database Storage Cost: This is the Cloud SQL storage cost of the Airflow metadata database.

- The Environment Scale Cost: The environment scale relates to Cloud Composer components that are entirely controlled by Google Cloud. Their scale is adjusted automatically according to the value set for the environment size parameter (small | medium | large). The Cloud SQL instance for the Airflow metadata database, the Cloud SQL proxy and the Redis task queue are examples of such components.

More detailed documentation on Cloud Composer 2 pricing is available here.

Snapshots as a way to shut down Composer and still preserve its state

When it comes to lowering the cost of Cloud Composer, there aren't many ideas to try. The usual route is to size the Environment so that no resources are wasted. This means using the minimum number of GKE Nodes for Airflow Workers and the smallest possible Cloud SQL instance, given the actual workloads that will be deployed on the Composer Environment.

Frankly, that's easier said than done. With its autoscaling feature, Cloud Composer 2 simplifies our life by automatically downscaling the Airflow Workers count to 1 when there is not much demand on the Environment. However, downscaling to zero is not supported, and there is no way to stop or disable a Cloud Composer Environment.

This is a serious pain point for many Cloud Composer users, who may feel they spend more on Cloud Composer than they get out of it. In most cases, development and test Composer Environments do not need to stay up at night or over the weekend. Keeping non-production Environments up all the time is not economical, yet it is what Cloud Composer users end up doing because there is no native start & stop feature.
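To put a rough number on it, here is a back-of-the-envelope sketch, not a pricing calculator. It assumes, as a simplification, that the time-based parts of the bill scale linearly with the time the Environment exists, and it ignores creation and destruction time as well as the storage of any backup bucket. The helper names are hypothetical. The example schedule is 7 a.m. to 9 p.m. on weekdays.

```shell
#!/usr/bin/env bash
# Back-of-the-envelope estimate; hypothetical helper names.
# Assumption: time-based costs scale linearly with Environment uptime.

HOURS_PER_WEEK=168

# Hours per week an Environment is up when it runs from start_hour to
# end_hour (24h clock, same day) on days_per_week days.
uptime_hours_per_week() {
  local start_hour=$1 end_hour=$2 days_per_week=$3
  echo $(( (end_hour - start_hour) * days_per_week ))
}

# Whole percentage (rounded down) of the week during which the Environment
# does not exist, i.e. the share of time-based cost avoided.
avoided_percent() {
  local uptime=$1
  echo $(( (HOURS_PER_WEEK - uptime) * 100 / HOURS_PER_WEEK ))
}

uptime=$(uptime_hours_per_week 7 21 5)
echo "Uptime: ${uptime} h/week, time-based cost avoided: ~$(avoided_percent "$uptime")%"
```

With this schedule the Environment runs 70 of the 168 hours in a week, so it does not exist for roughly 58% of the time.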

Google introduced Environment Snapshots in April 2022 as a preview feature, which became Generally Available in December 2022. As the name implies, Environment Snapshots capture the state of a Cloud Composer Environment. A snapshot can later be loaded to restore an Environment to the state it was in when the snapshot was created.

Using this feature, it becomes possible to simulate a start & stop feature as the Environment can be destroyed and recreated without losing its state.

Note: Be aware that Cloud Composer Snapshots do not preserve the Airflow task logs.

Here is the 3-step secret recipe to drastically cut the Cloud Composer bill of non-production Environments:

- Create the Environment and load the latest snapshot, if any (there is no snapshot to load the first time you create the Environment)

- Perform any update that you need on the environment

- Save a snapshot and destroy the Environment when you no longer need it
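Before automating anything, the recipe can be sketched as a few shell functions. This is a minimal sketch, not the pipeline from this series: the function names, the `run` wrapper and the `DRY_RUN` switch are hypothetical, step 2 (updates) and task-log handling are left out, and the bucket name reuses the `<PROJECT_ID>-europe-west1-backup` naming used later in this article. With `DRY_RUN=1`, the gcloud commands are printed instead of executed.

```shell
#!/usr/bin/env bash
# Minimal sketch of the 3-step recipe (hypothetical function names).
# Step 2 (updates) and task-log handling are intentionally left out.
# Set DRY_RUN=1 to print the gcloud commands instead of running them.

LOCATION=europe-west1
IMAGE_VERSION=composer-2.1.10-airflow-2.4.3

# Execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Step 1: create the Environment, then load a snapshot if a path is given.
start_environment() {
  local project=$1 env=$2 snapshot_path=${3:-}
  run gcloud composer environments create "$env" \
    --project "$project" --location "$LOCATION" \
    --image-version="$IMAGE_VERSION" \
    --service-account "sac-cmp@${project}.iam.gserviceaccount.com" || return 1
  if [ -n "$snapshot_path" ]; then
    run gcloud composer environments snapshots load "$env" \
      --project "$project" --location "$LOCATION" \
      --snapshot-path "$snapshot_path"
  fi
}

# Step 3: save a snapshot into the backup bucket, then delete the Environment.
# The Environment is only deleted if the snapshot was saved successfully.
stop_environment() {
  local project=$1 env=$2
  run gcloud composer environments snapshots save "$env" \
    --project "$project" --location "$LOCATION" \
    --snapshot-location "gs://${project}-${LOCATION}-backup/snapshots" || return 1
  run gcloud composer environments delete "$env" \
    --project "$project" --location "$LOCATION" --quiet
}
```

For example, `DRY_RUN=1 stop_environment my-project my-env` prints the save and delete commands without touching any Environment. Returning early when the save fails keeps the Environment alive if no snapshot could be taken.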

In a professional context, these steps would be executed by a CICD pipeline, which is exactly what the upcoming sections cover.

Creating Composer Environments using Snapshots

Let's say we want to create the Composer development Environment every weekday at 7 a.m. and destroy it at 9 p.m. the same day. We need to follow the steps below:

- Create the Cloud Storage Backup Bucket and the Environment Service Account

- Create a repository in Cloud Source Repositories to hold the Environment Creation pipeline

- Configure a Cloud Build Trigger to run the Environment Creation Pipeline

Note: You need to have gcloud installed before proceeding. If that is not the case, please refer to the gcloud installation guide.

Step 1: Create the Cloud Storage Backup Bucket and the Environment Service Account

Are you asking why? The Cloud Storage bucket will store the Environment snapshots as well as the task logs, which are not saved as part of a snapshot.

```shell
gsutil mb gs://<PROJECT_ID>-europe-west1-backup
```

As for the Environment service account, it is good practice to use a user-created service account with the minimum required permissions, following the so-called principle of least privilege. The account will be given the Composer Worker role.

```shell
# Enable the Composer service
gcloud services enable composer.googleapis.com

# Create the Environment service account. Name it "sac-cmp"
gcloud iam service-accounts create sac-cmp

# Add the Composer Worker role to the sac-cmp service account
gcloud projects add-iam-policy-binding <PROJECT_ID> \
    --member serviceAccount:sac-cmp@<PROJECT_ID>.iam.gserviceaccount.com \
    --role roles/composer.worker

# Add the Composer ServiceAgentV2Ext role to the Composer Service Agent
# Watch out, do not confuse the Project ID with the Project Number
gcloud iam service-accounts add-iam-policy-binding sac-cmp@<PROJECT_ID>.iam.gserviceaccount.com \
    --member serviceAccount:service-<PROJECT_NUMBER>@cloudcomposer-accounts.iam.gserviceaccount.com \
    --role roles/composer.ServiceAgentV2Ext
```

Note: The last command gives the Cloud Composer Service Agent the Service Agent V2 Ext role on the user-created Environment service account.
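Since mixing up the Project ID and the Project Number is an easy mistake, a small guard can help. The sketch below is a hypothetical helper (not part of the gcloud CLI) that builds the Service Agent address and rejects anything that is not purely numeric. The commented usage looks the number up with `gcloud projects describe` and `--format='value(projectNumber)'`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the Cloud Composer Service Agent address from a
# project NUMBER, refusing anything that is not purely numeric
# (a project ID such as "reduce-composer-bill" is rejected).
composer_agent_email() {
  local project_number=$1
  case "$project_number" in
    ''|*[!0-9]*)
      echo "expected a numeric project number, got '${project_number}'" >&2
      return 1
      ;;
  esac
  echo "service-${project_number}@cloudcomposer-accounts.iam.gserviceaccount.com"
}

# Usage (requires gcloud): derive the number from the project ID first.
# project_number=$(gcloud projects describe "<PROJECT_ID>" --format='value(projectNumber)')
# composer_agent_email "$project_number"
```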

That's it for the prerequisites. The next step is to create a repository in Cloud Source Repositories to hold the Environment Creation pipeline.

Step 2: Create a repository in Cloud Source Repositories to hold the Environment Creation pipeline

Let's take a detour and introduce Cloud Source Repositories (CSR), a private Git repository hosting service offered by Google Cloud. To run the Environment creation CICD pipeline, we need to create a Cloud Build Trigger, which works by cloning the content of a Git repository. Cloud Build supports many popular Git hosting services such as Bitbucket, GitHub and GitLab. For the sake of simplicity, this article uses CSR as the Git repository source for Cloud Build.

Before we can create a Git repository in CSR, some prerequisites need to be taken care of. In a nutshell, we need to enable the CSR API and configure Git to interact with CSR.

# Enable the CSR API

gcloud services enable sourcerepo.googleapis.com

# Configure Git. Make sure git is installed before

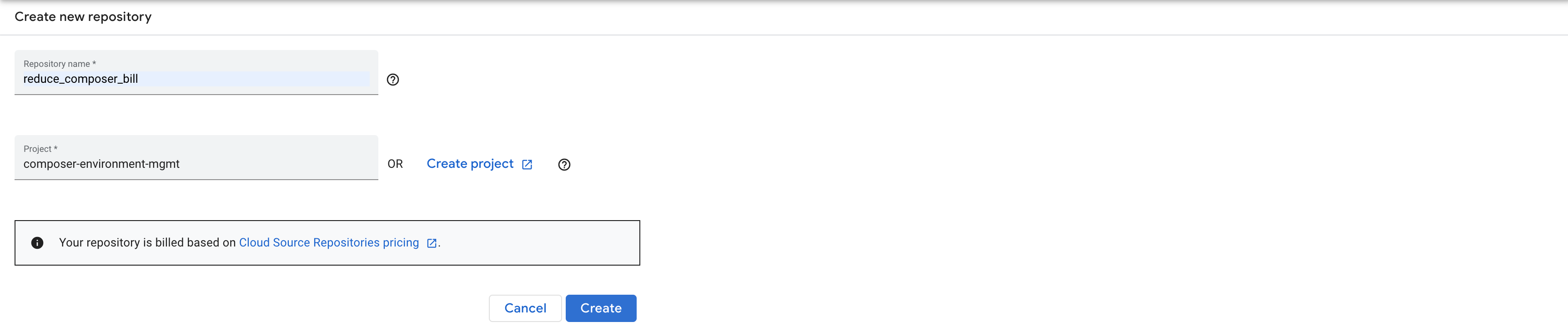

gcloud init && git config --global credential.https://source.developers.google.com.helper gcloud.shNow, we can go ahead and create the CSR Git repository _reduce_composerbill which will hold the Environment creation CICD pipeline. For that we need to go to source.cloud.google.com and click on Get started and then Create repository button

Then choose Create new repository and click Continue.

Then name the repository reduce_composer_bill and choose the GCP project in which you want the CSR Git repository to be created.

Warning: Please do not use composer-environment-mgmt as that won't work for you. Use your own GCP project.

The next thing to do is to clone this Gitlab repository on your computer and push its content to the CSR repository reduce_composer_bill. Before pushing to the CSR repository, edit the 3 files create_environment.yaml, destroy_environment.yaml and update_environment.yaml, replacing the PROJECT_ID and ENV_NAME variables with the ID of your GCP project and the name you wish to give the Composer Environment, respectively.

Note: The variables PROJECT_ID and ENV_NAME might appear multiple times in some of the 3 files

```shell
# Clone the Git repository
git clone git@gitlab.com:marcdjoh/reduce_composer_bill.git

# Push the edited files into the CSR repository reduce_composer_bill
# To do that, follow the instructions in the CSR console
```
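The editing step between cloning and pushing boils down to a find-and-replace, which can be scripted with sed. This is a sketch with a hypothetical `replace_in_files` function; it assumes the files carry the example values shown in the pipeline (`reduce-composer-bill`, `my-basic-environment`) and that the old and new values only contain letters, digits, hyphens and underscores, which holds for GCP project IDs and Composer environment names. Review the files afterwards, since every occurrence is replaced.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace every occurrence of OLD with NEW in the given files.
# Assumes OLD and NEW only contain letters, digits, hyphens and underscores,
# so neither needs escaping for sed.
replace_in_files() {
  local old=$1 new=$2
  shift 2
  local f
  for f in "$@"; do
    # Portable in-place edit (GNU and BSD sed differ on -i):
    # write to a temporary file, then replace the original.
    sed -e "s/${old}/${new}/g" "$f" > "$f.tmp" && mv "$f.tmp" "$f"
  done
}

# Usage, from the root of the cloned repository (use your own values):
# files="create_environment.yaml destroy_environment.yaml update_environment.yaml"
# replace_in_files reduce-composer-bill my-gcp-project $files
# replace_in_files my-basic-environment my-composer-env $files
```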

Step 3: Configure a Cloud Build Trigger to run the Environment Creation Pipeline

Cloud Build is the Google Cloud service for Continuous Integration and Continuous Deployment (CICD). The Environment Creation Pipeline does 3 things:

- It creates an Environment

- It loads the latest snapshot available if any

- It restores the Environment's task logs
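Two small pieces of shell logic in this pipeline are worth isolating: picking the snapshot folder to load and deriving the Environment's logs folder from its DAGs folder. The sketch pulls them out as pure functions with hypothetical names, so they can be tried without a Composer Environment. `logs_folder` reproduces the `cut -d / -f-3` trick of the Restore Tasks Logs step. `latest_snapshot` is a slightly more defensive variant of the Load Snapshot step, which passes the whole `gsutil ls` listing to `--snapshot-path` and therefore expects a single snapshot folder; it assumes snapshot folder names sort chronologically, which you should check against your own bucket.

```shell
#!/usr/bin/env bash
# Pure helpers isolating two pieces of logic from the creation pipeline.

# Print the lexicographically last non-empty line of a `gsutil ls` listing
# read from stdin. Assumption: snapshot folder names sort chronologically.
latest_snapshot() {
  grep -v '^[[:space:]]*$' | sort | tail -n 1
}

# Turn the DAGs prefix (config.dagGcsPrefix), e.g. gs://my-bucket/dags,
# into the Environment's logs folder, e.g. gs://my-bucket/logs.
logs_folder() {
  local dags_prefix=$1
  echo "$(echo "$dags_prefix" | cut -d / -f-3)/logs"
}
```

For instance, `logs_folder gs://my-bucket/dags` prints `gs://my-bucket/logs`, and `gsutil ls gs://<PROJECT_ID>-europe-west1-backup/snapshots | latest_snapshot` would print a single folder even if several snapshots have accumulated.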

The create_environment.yaml file implements these 3 steps as Cloud Build steps:

```yaml
steps:
- name: gcr.io/cloud-builders/gcloud
  entrypoint: /bin/bash
  id: 'Create environment'
  args:
  - -c
  - |
    set -e
    # This is an example project_id and env_name. Use your own
    project_id=reduce-composer-bill
    env_name=my-basic-environment
    gcloud composer environments create ${env_name} --location europe-west1 \
      --project ${project_id} --image-version=composer-2.1.10-airflow-2.4.3 \
      --service-account sac-cmp@${project_id}.iam.gserviceaccount.com
- name: gcr.io/cloud-builders/gcloud
  entrypoint: /bin/bash
  id: 'Load Snapshot'
  args:
  - -c
  - |
    set -e
    # This is an example project_id and env_name. Use your own
    project_id=reduce-composer-bill
    env_name=my-basic-environment
    # Load the snapshot stored under snapshots/, if there is one
    if gsutil ls gs://${project_id}-europe-west1-backup/snapshots/* ; then
      snap_folder=$(gsutil ls gs://${project_id}-europe-west1-backup/snapshots)
      gcloud composer environments snapshots load ${env_name} --project ${project_id} \
        --location europe-west1 \
        --snapshot-path ${snap_folder}
    else
      echo "There is no snapshot to load"
    fi
- name: gcr.io/cloud-builders/gcloud
  entrypoint: /bin/bash
  id: 'Restore Tasks Logs'
  args:
  - -c
  - |
    set -e
    # This is an example project_id and env_name. Use your own
    project_id=reduce-composer-bill
    env_name=my-basic-environment
    if gsutil ls gs://${project_id}-europe-west1-backup/tasks-logs/* ; then
      # The logs folder sits next to the dags folder in the Environment bucket
      dags_folder=$(gcloud composer environments describe ${env_name} --project ${project_id} \
        --location europe-west1 --format="get(config.dagGcsPrefix)")
      logs_folder=$(echo $dags_folder | cut -d / -f-3)/logs
      gsutil -m cp -r gs://${project_id}-europe-west1-backup/tasks-logs/* ${logs_folder}/
    else
      echo "There are no task logs to restore"
    fi
```

The build runs as the Cloud Build service account. We therefore grant the Project Editor role to the Cloud Build service account so that it can create (and later destroy and update) the Composer Environment and copy files to Cloud Storage buckets. Finally, we create a Cloud Build trigger to run the Environment creation pipeline.

```shell
# Add the Project Editor role to the Cloud Build service account
gcloud projects add-iam-policy-binding <PROJECT_ID> \
    --member serviceAccount:<PROJECT_NUMBER>@cloudbuild.gserviceaccount.com \
    --role roles/editor

# Create a Cloud Build trigger for the Environment creation CICD pipeline
gcloud builds triggers create manual --name trg-environment-creator \
    --build-config create_environment.yaml --repo reduce_composer_bill \
    --branch main --repo-type CLOUD_SOURCE_REPOSITORIES
```

Summing Up

This article is the first part of a two-part series whose goal is to present an efficient way to reduce the Cloud Composer bill for all Cloud Composer users. The strategy relies heavily on saving and loading Environment Snapshots to shut down non-production Environments without losing their state.

The CICD pipelines code is available in this Gitlab repository. Feel free to check it out. Also, the second part of the series can be consulted here.

Thank you for your time and stay tuned for more.